NVIDIA B200: The New Standard GPU for the Era of Large-Scale AI

Elice

7/14/2025

1. The Era of Giant AI Models: A New Infrastructure Challenge

GPT-4, LLaMA 3, Claude 3 Opus—AI models with billions or even trillions of parameters are no longer exceptional.

But traditional GPU server infrastructure is hitting its limits:

- Insufficient memory requires model sharding

- GPU-to-GPU communication bottlenecks

- Inadequate real-time inference speed

- Rising power consumption and costs

Simply adding more GPUs no longer solves the problem.

What we need is a reimagining of AI infrastructure itself.

And NVIDIA’s answer is clear: the B200 GPU.

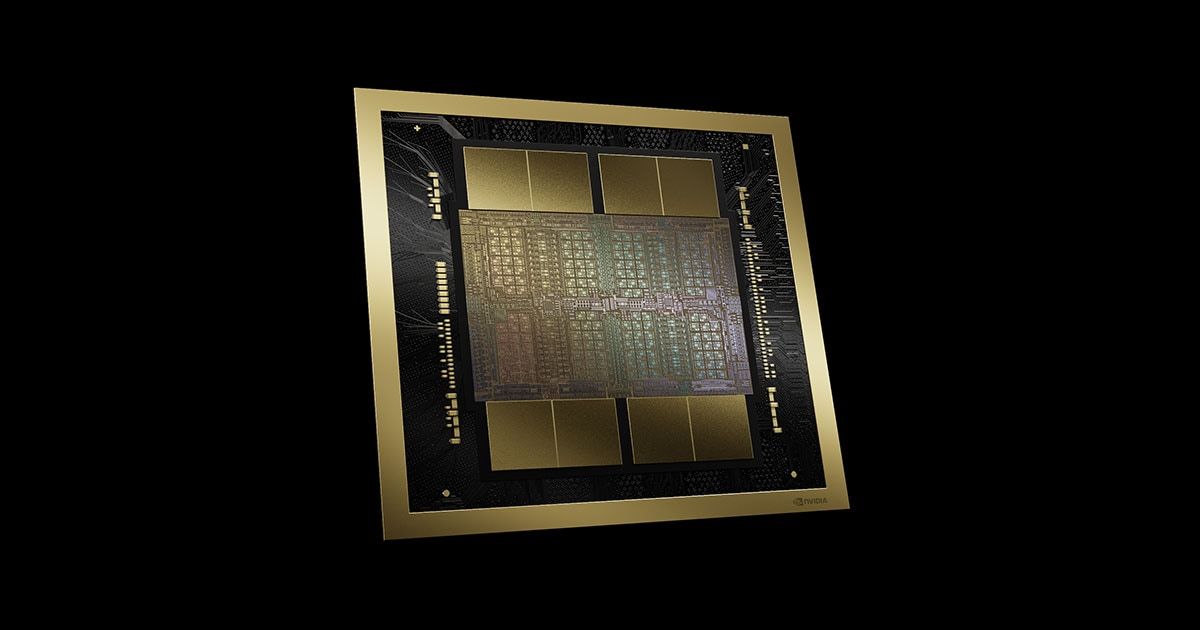

2. Introducing the NVIDIA B200: Powered by the New Blackwell Architecture

The NVIDIA B200 is a next-generation GPU based on the cutting-edge Blackwell architecture.

Compared to the previous-gen H100, the B200 offers:

- More memory: 180GB of HBM3E, up from 80GB

- Faster interconnects: 1.8 TB/s of NVLink bandwidth, up from 900 GB/s

- Greater efficiency

Quick Specs: NVIDIA B200

| Category | Spec |
|---|---|
| Architecture | Blackwell |
| GPU Memory | 180GB HBM3E |
| FP8 Training | 72 PFLOPS (across 8 GPUs) |
| FP4 Inference | 144 PFLOPS (across 8 GPUs) |
| NVLink Bandwidth | 1.8 TB/s |
| Form Factor | SXM6 |
| Use Cases | Giant LLM training, ultra-low latency inference, AI simulation |
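The FP8 and FP4 figures in the table are totals for an 8-GPU system, so it can help to convert them to per-GPU numbers before comparing against single-GPU specs. The following is a minimal sketch of that arithmetic; the function name `per_gpu_pflops` is illustrative, and it assumes throughput divides evenly across the GPUs.

```python
# Per-GPU throughput derived from the 8-GPU aggregate figures in the table.
# Assumption: throughput divides evenly across the GPUs in the system.

AGGREGATE_PFLOPS = {"FP8 training": 72.0, "FP4 inference": 144.0}
GPUS_PER_SYSTEM = 8


def per_gpu_pflops(aggregate_pflops: float, gpu_count: int = GPUS_PER_SYSTEM) -> float:
    """Return the per-GPU share of an aggregate throughput figure."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return aggregate_pflops / gpu_count


if __name__ == "__main__":
    for label, total in AGGREGATE_PFLOPS.items():
        print(f"{label}: {per_gpu_pflops(total):.0f} PFLOPS per GPU")
```

Under that assumption, the table works out to 9 PFLOPS of FP8 and 18 PFLOPS of FP4 per GPU.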

3. H100 vs. B200: A Leap in Performance

| Feature | H100 | B200 |
|---|---|---|
| GPU Memory | 80GB | 180GB |
| NVLink Bandwidth | 900 GB/s | 1.8 TB/s |
| Peak Performance (1 GPU) | Up to 4 PFLOPS (FP8) | Up to 9 PFLOPS (FP8), 18 PFLOPS (FP4) |
| LLM Efficiency | Large models often require sharding across GPUs | Larger models can run end-to-end within a single instance |

The B200 delivers up to 4.5× faster inference and 2.5× faster training compared to the H100.

This is a breakthrough, not just an upgrade.
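Whether a model needs sharding depends largely on whether its weights fit in GPU memory. The sketch below estimates the minimum number of GPUs needed to hold the weights alone, using the 80GB and 180GB figures from the comparison table. The 70-billion-parameter model and the 2-bytes-per-parameter (FP16) precision are illustrative assumptions, not figures from NVIDIA, and the estimate ignores activations, KV cache, and optimizer state.

```python
import math

GB = 10**9  # decimal gigabytes, matching how GPU memory is usually quoted


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the model weights alone, in GB."""
    return num_params * bytes_per_param / GB


def min_gpus_for_weights(num_params: float, bytes_per_param: float,
                         gpu_memory_gb: float) -> int:
    """Smallest GPU count whose combined memory can hold the weights."""
    needed = weight_memory_gb(num_params, bytes_per_param)
    return math.ceil(needed / gpu_memory_gb)


if __name__ == "__main__":
    params = 70e9        # hypothetical 70B-parameter model
    bytes_per_param = 2  # FP16 weights (assumption)
    print(f"Weights: {weight_memory_gb(params, bytes_per_param):.0f} GB")
    print("80GB GPUs needed:", min_gpus_for_weights(params, bytes_per_param, 80))
    print("180GB GPUs needed:", min_gpus_for_weights(params, bytes_per_param, 180))
```

With these assumed numbers, the 140 GB of weights need at least two 80GB GPUs but fit on a single 180GB GPU. Real deployments need additional headroom beyond the weights.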

4. Real-World Benchmark: Over 1,000 TPS with LLaMA 4 Maverick

According to NVIDIA’s official benchmark, B200 GPUs achieved 1,038 tokens per second per user with Meta’s LLaMA 4 Maverick.

Why this matters:

- No more chatbot delays

- Real-time LLM for search and assistants

- Scalable inference for thousands of concurrent users
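To put the throughput figure in terms a user would notice, it can be converted into per-token latency and total generation time. This is a rough sketch: the 500-token response length is an assumed example, and the calculation ignores time to first token, network latency, and queuing.

```python
def ms_per_token(tokens_per_second: float) -> float:
    """Average time between generated tokens, in milliseconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return 1000.0 / tokens_per_second


def response_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate num_tokens at a steady per-user rate, in seconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    tps_per_user = 1038    # per-user rate quoted in the benchmark
    response_tokens = 500  # assumed response length, for illustration
    print(f"{ms_per_token(tps_per_user):.2f} ms per token")
    print(f"{response_time_s(response_tokens, tps_per_user):.2f} s "
          f"for {response_tokens} tokens")
```

At the quoted rate, each token takes just under one millisecond, so a 500-token answer would be generated in roughly half a second.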

5. Should You Switch to B200? Check These Boxes First:

The B200 isn’t just a high-performance chip—it’s the solution for infrastructure bottlenecks.

Here’s who should consider it:

| Who Should Switch | Why |
|---|---|
| Giant LLM developers | Can train and serve GPT-4-scale models efficiently |
| Generative AI / SaaS | Lower latency and reduced operating costs |
| Research labs / Universities | Large-scale experimentation and fine-tuning |
| Biotech / Manufacturing | Real-time simulation and generative AI deployments |
| Cloud providers / AI farms | Robust backend for public LLM services |

6. Release Timeline and Early Adoption in Korea

- Announced: GTC March 2024

- Global Availability: Early 2025

- Korean Debut: February 2025 via AdTech firm PYLER

  - Use case: Real-time video analytics and AI-powered ad targeting

  - Result: Over 30× performance improvement over previous setup

The B200 is still in early adoption, but major Korean enterprises and public labs are moving quickly.

7. Two Flexible Ways to Use NVIDIA B200 with ELICE

ELICE helps you adopt B200 hardware without friction—whether you’re a startup or a data center operator.

Here are your options:

Option ①: Cloud-Based B200 GPU Instances

B200 GPUs are available on demand via ELICE Cloud:

- No upfront hardware cost

- Includes NVIDIA AI Enterprise suite

- Flexible scale-up as workloads grow

- End-to-end support for training, fine-tuning, and inference

Recommended For:

- Startups or research teams on a tight budget

- Short-term AI projects needing powerful GPUs

- Teams that want flexibility without building infrastructure

Option ②: Modular AI Datacenter (PMDC) with B200 Inside

ELICE provides PMDCs (Portable Modular Data Centers) equipped with B200 GPUs.

Why choose PMDC?

- Fast setup: Operational in 3–4 months

- Lower total cost: Up to 50% TCO savings

- Scalable: Add GPU modules or blocks as needed

Recommended For:

- Enterprises needing on-prem infrastructure

- Public agencies with strict cloud policies

- Organizations with long-term AI roadmaps

Want to Experience the B200 for Yourself?

We’ve prepared a special B200 promo to help you get started.
